Small LLMs: Why Businesses Will Choose Lean Over Large

Introduction

For the last few years, the conversation around artificial intelligence has been dominated by the idea that "bigger is better." Large language models promised higher accuracy, broader knowledge, and more impressive demonstrations. However, in 2026, the focus is shifting. Businesses are realizing that value does not come from size; it comes from fit.

This is where small, specialized language models (Small LLMs) enter the picture. Instead of relying on massive, expensive, general-purpose models, companies are choosing leaner, domain-specific models that are faster, cheaper, easier to control, and easier to integrate into real business workflows.

This article explains what Small LLMs are, why they are gaining momentum, and how they deliver more business value than their oversized counterparts.

What Are Small LLMs?

Small LLMs are compact language models that are:

- Trained on smaller, curated datasets

- Often fine-tuned for specific domains or tasks

- Designed to run efficiently on private or edge infrastructure

- Optimized for speed, cost, privacy, and control

While large foundation models may contain hundreds of billions of parameters, Small LLMs typically range from a few million to a few billion parameters – enough to perform well on targeted use cases without unnecessary complexity.
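To make the size gap concrete, here is a minimal sketch of the usual back-of-the-envelope estimate: weight memory is roughly the parameter count multiplied by the bytes used per parameter. The two model sizes below are illustrative assumptions, not specific products, and the estimate ignores activations, caches, and runtime overhead.

```python
# Rough weight-memory estimate: parameters x bytes per parameter.
# Ignores activations, KV cache, and runtime overhead, so real usage is higher.

BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Return approximate weight storage in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


if __name__ == "__main__":
    # Illustrative sizes only (assumptions, not named models).
    for name, params in [("3B-parameter model", 3e9), ("175B-parameter model", 175e9)]:
        for precision in ("fp16", "int4"):
            gb = weight_memory_gb(params, precision)
            print(f"{name} @ {precision}: {gb:.1f} GB")
```

Under these assumptions, a few-billion-parameter model can fit on a single commodity GPU or even a laptop, while a model with hundreds of billions of parameters needs multi-GPU servers just to hold its weights.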

Think of large models as encyclopedias and small models as expert handbooks. Both have value, but businesses usually need precision, not encyclopedic breadth.

Why Businesses Are Moving Toward Smaller Models

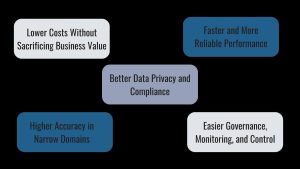

1. Lower Costs Without Sacrificing Business Value

Large LLMs are expensive to:

- Host

- Query at scale

- Fine-tune

- Secure

Small LLMs can substantially reduce:

- Compute costs

- Inference latency

- Energy consumption

- Infrastructure requirements

This can make AI projects financially sustainable, especially for mid-sized companies or products with high user volumes, where per-request costs multiply quickly.
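The effect of volume on cost is easy to sketch with simple arithmetic. The function below is a hypothetical helper, and every number in the usage example (request volume, tokens per request, and both per-token prices) is a placeholder assumption rather than a real vendor price. Substitute your own figures.

```python
def monthly_inference_cost(
    requests_per_day: int,
    tokens_per_request: int,
    price_per_million_tokens: float,
    days: int = 30,
) -> float:
    """Estimate monthly spend from volume and a per-million-token price."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1_000_000 * price_per_million_tokens


if __name__ == "__main__":
    # All values below are placeholders chosen for illustration only.
    volume = dict(requests_per_day=100_000, tokens_per_request=1_000)
    large = monthly_inference_cost(price_per_million_tokens=10.0, **volume)
    small = monthly_inference_cost(price_per_million_tokens=0.5, **volume)
    print(f"hypothetical large-model cost: {large:,.0f} per month")
    print(f"hypothetical small-model cost: {small:,.0f} per month")
```

The point of the sketch is the shape of the calculation, not the specific totals: at high volume, even a small difference in unit price dominates the monthly bill.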

2. Faster and More Reliable Performance

Smaller models:

- Respond faster

- Require fewer resources

- Are easier to scale horizontally

This matters for real-time use cases like:

- Customer support automation

- Internal assistants

- Monitoring systems

- IoT and edge devices

- Industrial software interfaces

Latency becomes a business risk when AI is embedded into operational systems. Small LLMs reduce that risk.

3. Better Data Privacy and Compliance

Many companies operate in regulated industries such as healthcare, finance, manufacturing, or government. Sending sensitive data to external AI APIs creates legal and reputational risks.

Small LLMs can be:

- Deployed on-premise

- Run inside private clouds

- Fully isolated from third-party platforms

This keeps data inside the company's own infrastructure, which simplifies compliance with GDPR and industry-specific regulations.

4. Higher Accuracy in Narrow Domains

Large models are generalists. They know a little about everything. Small models are specialists.

Small models can be trained or fine-tuned on:

- Internal documentation

- Product data

- Industry-specific language

- Technical manuals

- Customer interaction history

On that material, they often outperform large models in those specific domains. For example:

- A Small LLM trained on medical terminology can outperform a general model in healthcare support.

- A Small LLM trained on manufacturing processes can provide more accurate operational guidance.

- A Small LLM trained on internal policies can become a reliable compliance assistant.
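Claims like these are worth verifying on your own data before committing to a model. The sketch below shows one simple way to do that: score each candidate on a held-out set of domain questions. Everything here is an assumption for illustration. The example questions are placeholders, and both "models" are hard-coded stubs that only exercise the harness; their scores say nothing about real systems.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (prompt, expected answer)


def accuracy(model: Callable[[str], str], examples: List[Example]) -> float:
    """Fraction of examples answered with an exact, case-insensitive match."""
    if not examples:
        raise ValueError("need at least one example")
    hits = sum(
        1
        for prompt, expected in examples
        if model(prompt).strip().lower() == expected.strip().lower()
    )
    return hits / len(examples)


# Hypothetical placeholder data; replace with a held-out set from your domain.
EXAMPLES: List[Example] = [
    ("Which form starts a refund request?", "Form R-1"),
    ("Who approves travel over budget?", "Department head"),
]


def candidate_a(prompt: str) -> str:
    """Stub standing in for a fine-tuned small model (hard-coded answers)."""
    return dict(EXAMPLES).get(prompt, "unknown")


def candidate_b(prompt: str) -> str:
    """Stub standing in for a general model (always gives the same answer)."""
    return "Department head"


if __name__ == "__main__":
    for name, model in [("candidate_a", candidate_a), ("candidate_b", candidate_b)]:
        print(f"{name}: {accuracy(model, EXAMPLES):.0%}")
```

In practice, each stub would be replaced by a call to the model under test, and exact matching would likely give way to a scoring method suited to the task.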

5. Easier Governance, Monitoring, and Control

Smaller models are easier to:

- Audit

- Explain

- Monitor

- Update

- Roll back

This is critical as AI becomes part of core business logic rather than just an experimental feature. Businesses need AI they can govern, not AI they merely consume.
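As one illustration of what "update" and "roll back" can mean in practice, here is a minimal sketch of a deployment history that always knows which model version is live and can revert to the previous one. `ModelRegistry` and the version labels are hypothetical names invented for this example, not part of any particular tool.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ModelRegistry:
    """Tracks deployed model versions in order; the last entry is live."""

    history: List[str] = field(default_factory=list)

    def deploy(self, version: str) -> None:
        """Record a new version as the active one."""
        self.history.append(version)

    @property
    def active(self) -> Optional[str]:
        """Return the live version, or None if nothing is deployed."""
        return self.history[-1] if self.history else None

    def rollback(self) -> str:
        """Drop the live version and return the one that becomes active."""
        if len(self.history) < 2:
            raise RuntimeError("no earlier version to roll back to")
        self.history.pop()
        return self.history[-1]


if __name__ == "__main__":
    registry = ModelRegistry()
    registry.deploy("support-assistant-v1")  # hypothetical version labels
    registry.deploy("support-assistant-v2")
    print("active:", registry.active)
    print("rolled back to:", registry.rollback())
```

A real setup would also store the model artifacts, evaluation results, and an audit trail alongside each version, but the principle is the same: a small model that fits on your own infrastructure can be swapped in and out like any other software release.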

Where Small LLMs Create the Most Value

Small LLMs are particularly effective in:

- Internal knowledge assistants

- Customer support automation

- Sales enablement tools

- HR and onboarding systems

- Compliance and policy checking

- Engineering and DevOps copilots

- Manufacturing and logistics optimization

- Healthcare and clinical documentation

- Financial reporting and risk analysis

In each of these cases, the model does not need to know the entire internet. It needs to understand a specific language, context, and workflow.

Lean AI Is More Sustainable AI

Beyond business efficiency, Small LLMs also support sustainability goals:

- Lower energy consumption

- Smaller carbon footprint

- Less hardware demand

This aligns with growing expectations for responsible and sustainable technology adoption.

How InStandart Helps Businesses Implement Small LLMs

At InStandart, we help organizations move from AI experimentation to practical AI implementation. Our approach focuses on:

- Identifying the right AI use cases with measurable business value

- Selecting or building domain-specific Small LLMs

- Fine-tuning models on internal data securely

- Integrating AI into existing software systems

- Ensuring privacy, compliance, and scalability

Instead of deploying AI for the sake of innovation, we design AI solutions that solve real operational problems.

Conclusion

The future of business AI is not about the biggest models. It is about the right models.

Small LLMs offer:

- Lower costs

- Faster performance

- Better privacy

- Higher relevance

- Easier governance

They turn AI from a technological showcase into a reliable business tool. As companies move from experimentation to execution, lean, focused, and controllable AI will win over massive, opaque, and expensive systems.